Getting started

To get started with the PromptIDE, first sign in using your X account on ide.x.ai. Please bear in mind the access restrictions and device recommendations laid out here.

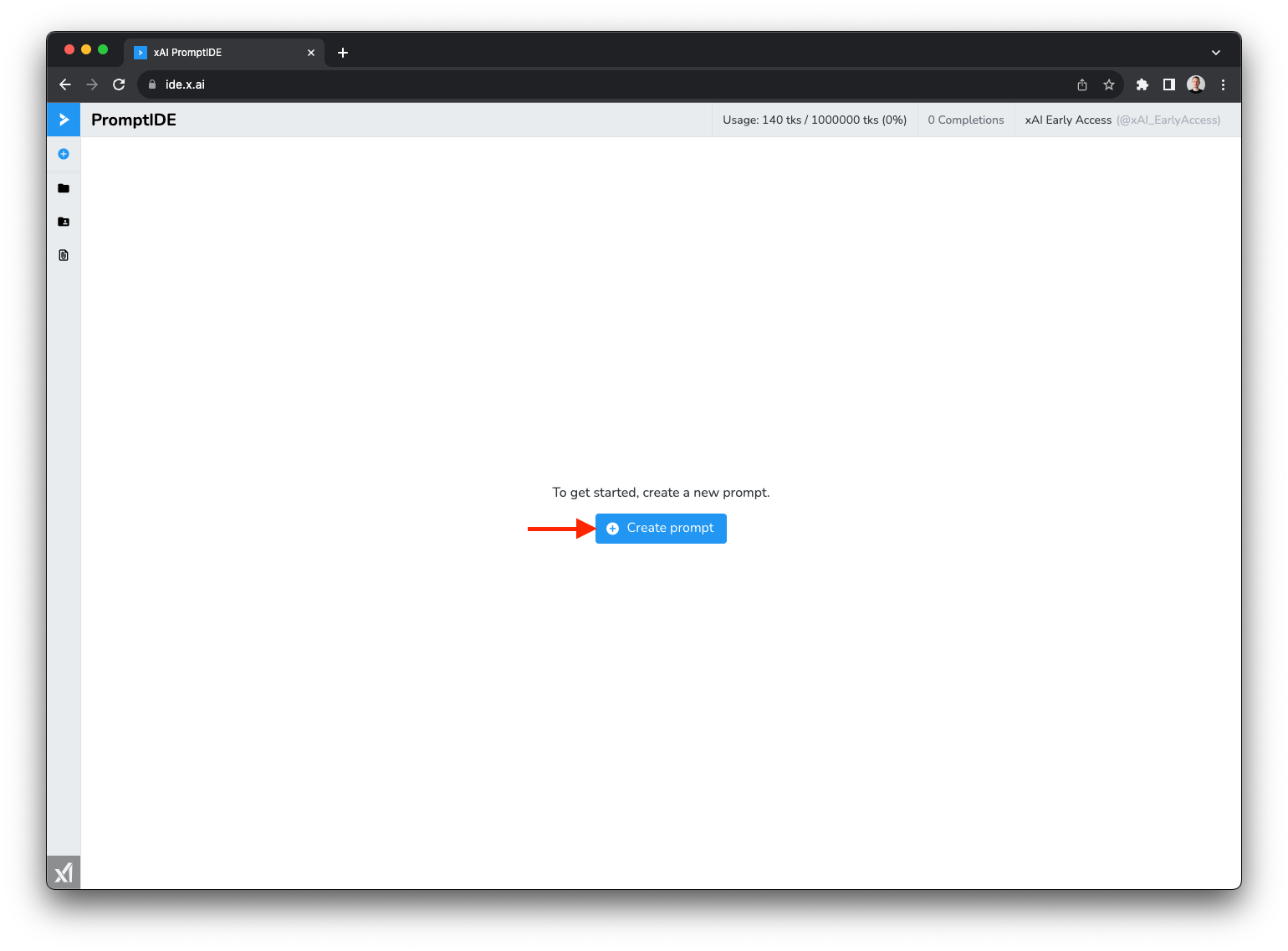

Step 1

After signing in, click the Create Prompt button to create your first prompt. A prompt in the context of the PromptIDE is nothing but a small, single-file Python program that comes with the PromptIDE SDK preloaded.

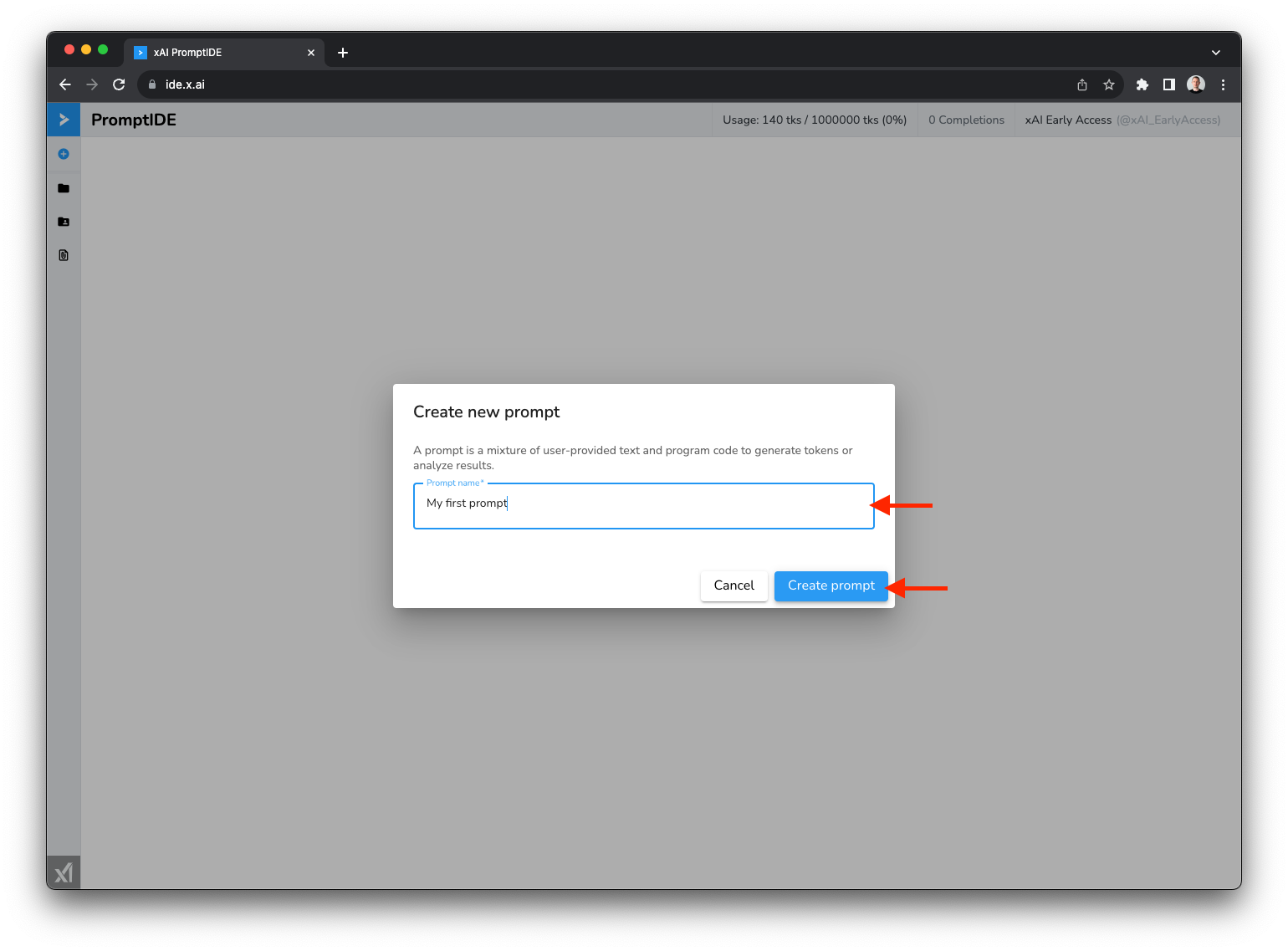

Step 2

When the dialog window opens, enter a name for your first prompt and click the Create Prompt button. The name you enter is only for your own organization, and you can change it later.

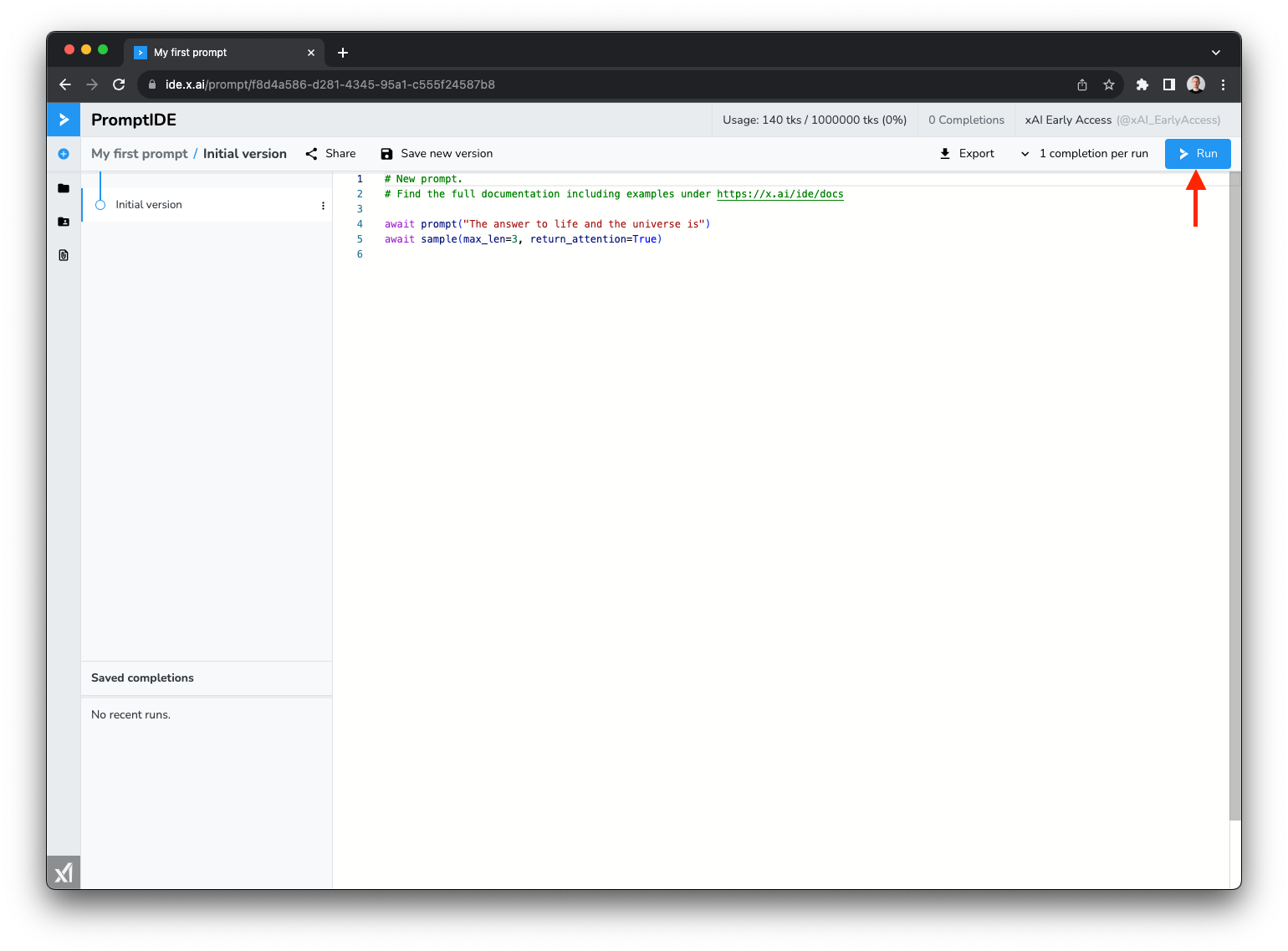

Step 3

You should now see an editor window displaying the default prompt. Before we explain the semantics of the default prompt, let's run it to see the result. To run the prompt, click the blue Run button in the top-right corner of the screen.

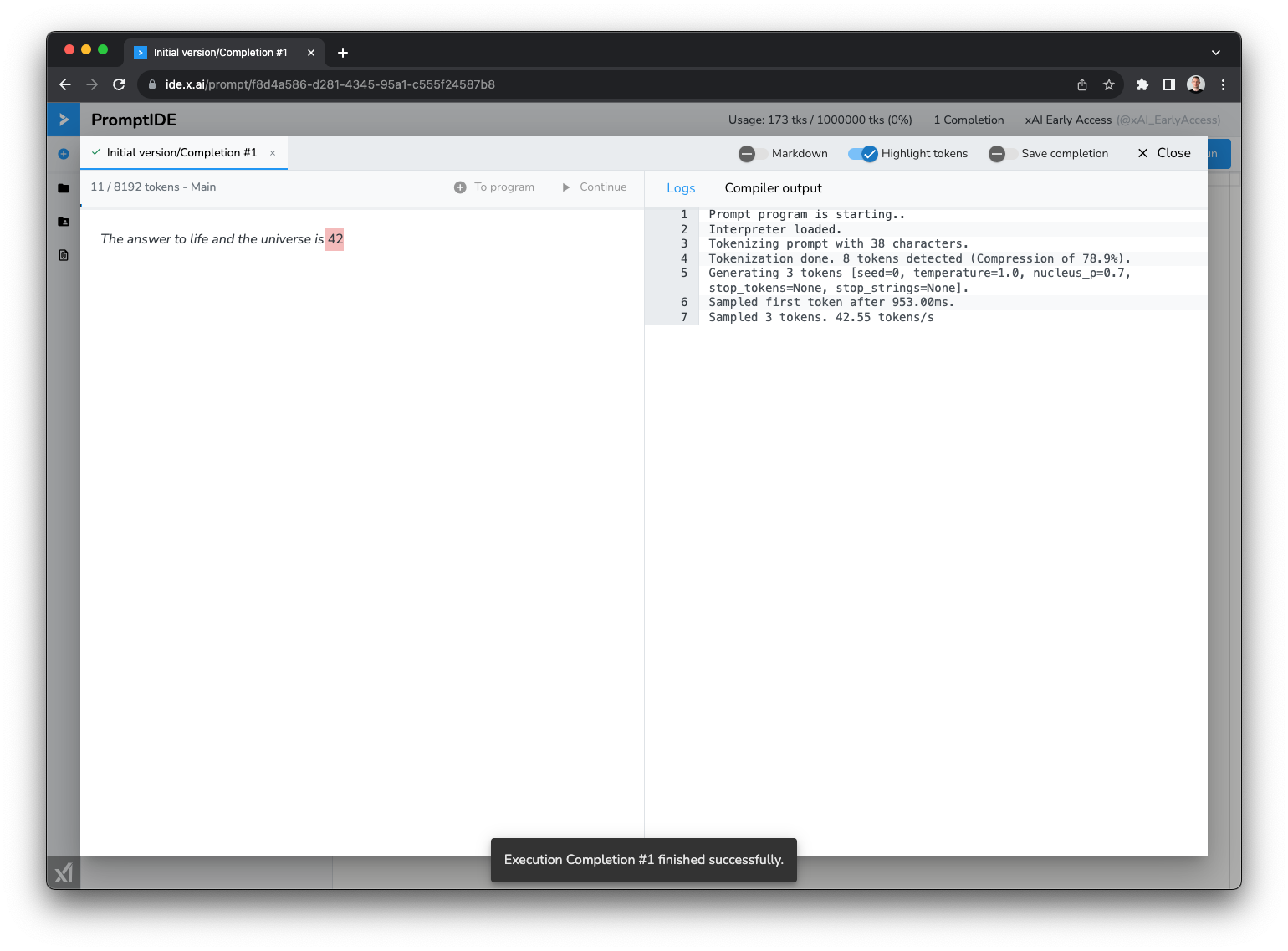

After clicking Run, a new dialog window pops up, which is called the Completion window. The dialog has two sides: on the left, you see the current context, and on the right, you see the logs produced by running your prompt. If you hover your mouse cursor over the text in the context, you can see the tokenization of the prompt. Tokens with a transparent/black background color have been provided by the user (i.e. you), and tokens with a highlighted background color have been sampled from the model. This behaviour can be configured using the Highlight tokens button in the corner of the completion window.

Let's close the completion window and return to the editor screen to look at the default prompt.

Default prompt

The default prompt consists of two lines that highlight the two most important functions of the PromptIDE SDK, prompt and sample:

await prompt("The answer to life and the universe is")

await sample(max_len=3, return_attention=True)

A prompt is always executed in an implicit context where a context is simply a sequence of tokens. You can add tokens to the current context using the prompt function, which takes an ordinary string, tokenizes it and adds the corresponding tokens to the context. The sample function allows you to sample new tokens from our model based on the tokens in the current context. In this example, we sample at most 3 tokens based on the context "The answer to life and the universe is" and with high probability, the model will sample the tokens 42.

Please take a look at our full SDK documentation to learn more about how contexts work.
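To make the idea of a context more concrete, here is a minimal toy sketch that runs outside the PromptIDE. It is not the PromptIDE SDK: the ToyContext class, its whitespace "tokenizer" and its canned "model" are all hypothetical stand-ins, chosen only to show how prompt appends user tokens and sample appends model tokens to the same sequence.

```python
import asyncio


class ToyContext:
    """Toy stand-in for a context (NOT the PromptIDE SDK): a list of tokens."""

    def __init__(self):
        # Each entry is (token, source), where source is "user" or "model".
        self.tokens = []

    async def prompt(self, text):
        # Assumption: split on whitespace instead of using a real tokenizer.
        for tok in text.split():
            self.tokens.append((tok, "user"))

    async def sample(self, max_len):
        # Assumption: a canned "model" that always proposes "42" and then stops.
        canned = ["42"]
        sampled = canned[:max_len]
        for tok in sampled:
            self.tokens.append((tok, "model"))
        return " ".join(sampled)


async def main():
    ctx = ToyContext()
    await ctx.prompt("The answer to life and the universe is")
    completion = await ctx.sample(max_len=3)
    return ctx, completion


ctx, completion = asyncio.run(main())
print(completion)
print(ctx.tokens)
```

The source tag on each token mirrors the distinction the Completion window draws between tokens provided by the user and tokens sampled from the model. In the real SDK the context is implicit, so you call prompt and sample directly rather than on an object as in this sketch.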

On async

The entire SDK uses Python asynchronous programming. This allows easy concurrency in complex prompts. The downside of using async functions is that the user (i.e. you) has to remember to await the results to avoid race conditions.
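The effect of a missing await can be seen with plain asyncio, independent of the PromptIDE. In the sketch below, fake_sample is a hypothetical stand-in for a slow model call and is not part of the SDK. Calling it without await only creates a coroutine object; nothing runs until the result is awaited. The same sketch shows asyncio.gather, one standard way to run several awaitables concurrently.

```python
import asyncio


async def fake_sample(label, delay):
    # Hypothetical stand-in for a slow model call (not an SDK function).
    await asyncio.sleep(delay)
    return f"{label} done"


async def main():
    # Calling an async function without await returns a coroutine object;
    # the function body has not started running yet.
    pending = fake_sample("a", 0.01)
    is_coroutine = asyncio.iscoroutine(pending)

    # Awaiting the coroutine actually runs it and yields its result.
    first = await pending

    # gather runs both calls concurrently and returns the results
    # in argument order, regardless of which call finishes first.
    rest = await asyncio.gather(fake_sample("b", 0.02), fake_sample("c", 0.01))
    return is_coroutine, first, rest


is_coroutine, first, rest = asyncio.run(main())
print(is_coroutine, first, rest)
```

If the await on pending were dropped, first would hold the coroutine object rather than a string, which is the kind of mistake the note above warns about.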